Reliable autonomy stacks from simulation to real hardware.

Robotics and autonomous systems engineer focused on ROS 2-based navigation, SLAM, perception pipelines, and edge inference on NVIDIA Jetson platforms. I build and integrate systems with a strong emphasis on reliability, testing, and safety-critical deployment readiness.

Impact Highlights

Metrics summarized from the case studies below (see “How we measured this” on each).

Skill Signal

Full-stack autonomy delivery

Orchestrating perception, mapping, simulation, and validation to ship reliable robot behavior.

Impact

Reduced field validation cycles by shifting autonomy testing into simulation-first workflows.

Toolchain

- ROS 2

- Nav2

- Omniverse / OpenUSD workflows

- Gazebo + NVIDIA Isaac Sim

- OpenCV

- PCL

- Docker

- CI pipelines

Evidence

- Real-to-Sim Pipeline

- Perception-Driven Navigation

- Simulation Validation CI

Featured Case Studies

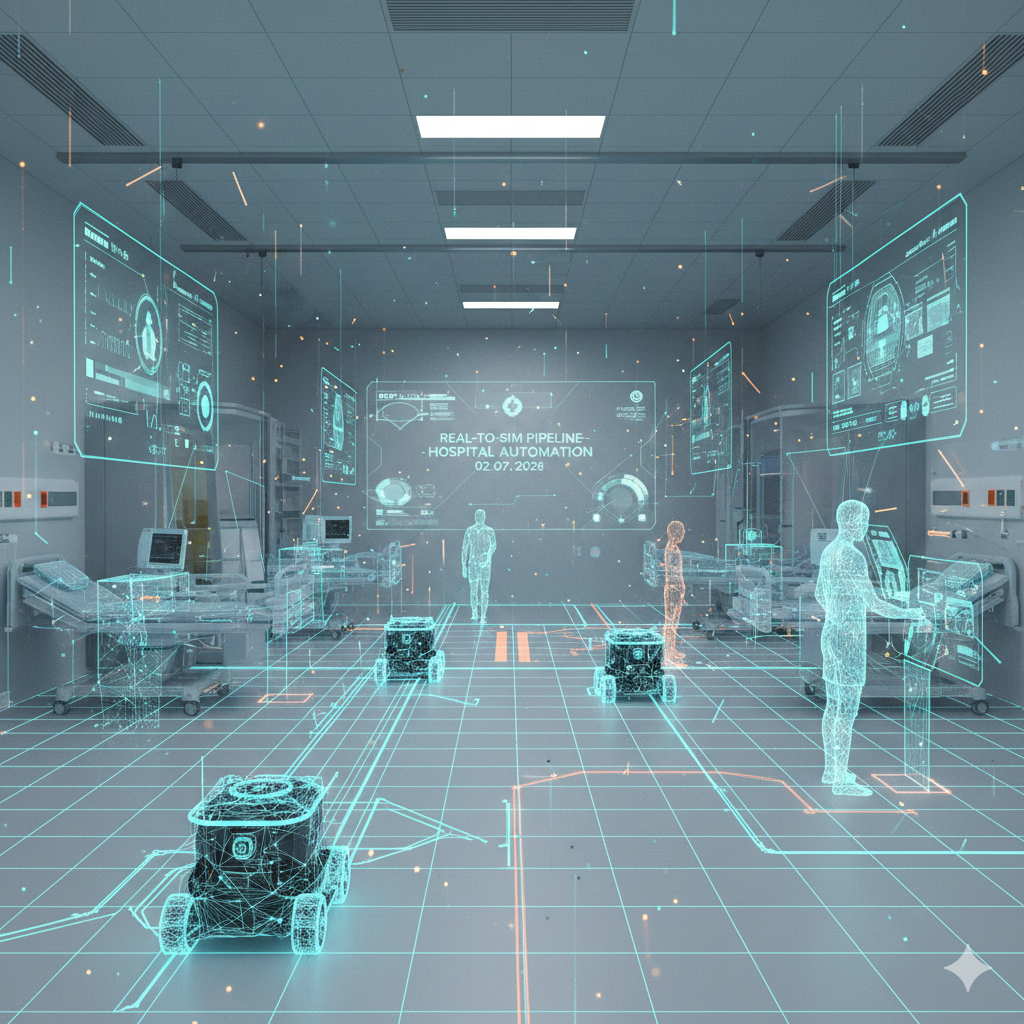

Demo clip available on request. Images shown are AI-generated stand-ins because I can't share the original customer footage.

Real-to-Sim Pipeline

Automated conversion of real-world scans into simulation-ready digital twins for AMR validation.

Digital Twins

Perception-Driven Navigation

Sensor-fusion navigation stack for dynamic, unstructured environments with human-aware planning.

ROS 2 + Nav2

Simulation Validation CI

Continuous validation infrastructure for autonomy stacks using headless simulation and KPI tracking.

CI + Simulation

Additional Projects

Autonomous Hospital Transport Robot

Jan 2024 – Dec 2024

Senior design project: an autonomous robot that delivers hospital supplies using a ROS 2-based navigation stack, SLAM, and onboard perception.

Mood Music

Sep 12, 2023 – Oct 24, 2023

Web app that recommends Spotify playlists based on detected or selected mood signals.

Additional Engineering Systems (Private + Public)

2020 – Present

A collection of internal autonomy and perception systems spanning GPU-accelerated 3D mapping, ROS 2 middleware integration, SLAM experimentation, embedded robotics deployment, applied AI tooling, and full-stack engineering prototypes. Many repositories are maintained privately by default, with selected public work available on GitHub.

- Real-time 3D reconstruction and volumetric mapping pipelines

- ROS 2 stereo perception and navigation middleware

- Simulation-first SLAM and localization evaluation workflows

- Applied LLM and automation toolchains for autonomy research

- Robotics software that scales from embedded boards to GPU platforms

Private repositories are available for technical review upon request.

Core Competencies

Perception

Multi-sensor fusion across LiDAR, RGB-D, and IMU for robust scene understanding.

Mapping & SLAM

High-fidelity maps and localization with EKF-based state estimation and continuous refinement.

Integration & Verification

System-level testing and validation workflows that improve reliability before deployment in real environments.

Autonomy

Navigation, behavior orchestration, and mission logic for safe and efficient fleet operations.

About

Engineering Snapshot

I'm an Autonomous Systems Engineer at Rovex, focused on reliable autonomy software for healthcare robotics deployments. My work spans ROS 2 node development, navigation/localization, perception integration, and system-level verification with deployment readiness in mind. I earned my B.S. in Computer Engineering from the University of Florida after completing my A.A. at Santa Fe College.

Based in Gainesville, FL.

Toolbox

- ROS 2, Nav2, SLAM, EKF Localization

- Gazebo + NVIDIA Isaac Sim, RViz, OpenCV, OpenVINO

- C++, Python, Embedded C, JavaScript, MATLAB

- NVIDIA Jetson (Nano / Orin), CUDA

- Git/GitHub, AWS, SolidWorks, Altium, Quartus

Resume

Dany Rashwan

Autonomous Systems Engineer · ROS 2 · Navigation (Nav2) · SLAM · Perception

I build reliable autonomy software from simulation through on-robot deployment, with a strong emphasis on verification, traceability, and safety-focused integration.

About

Currently focused on healthcare robotics at Rovex, shipping ROS 2 navigation, localization, and perception software with simulation-first validation and disciplined deployment practices.

Core Skills

- End-to-end autonomy architecture for healthcare robotics

- Localization and SLAM robustness under sensor noise

- Perception integration and edge inference optimization

- Verification, traceability, and deployment readiness

Primary Focus

- NVIDIA Isaac Sim / Omniverse / OpenUSD

- Simulation-first development

- ROS 2 system architecture (Nav2 + node orchestration)

- SLAM and localization reliability (EKF)

- Perception pipelines for LiDAR/camera systems

- Safety-focused validation and release readiness

Programming Languages

- C++ / C / C#

- Python

- Java

- JavaScript

- MATLAB

- Assembly

- VHDL

- SQL

- Bash / Shell Scripting

- HTML / CSS

Frameworks & Libraries

- ROS / ROS 2 middleware patterns (rclcpp, launch, tf2)

- Nav2 and Behavior Trees

- Robot description pipelines (URDF/Xacro)

- Sensor fusion pipelines (EKF, timing, calibration)

- OpenCV & Point Cloud Library (PCL)

- TensorFlow / PyTorch

- OpenVINO / TensorRT

Tools & Platforms

- Omniverse / OpenUSD toolchain

- Gazebo + NVIDIA Isaac Sim

- RViz / rosbag debugging workflows

- Docker

- Git / GitHub

- CI for headless simulation

- Jetson AGX

- Raspberry Pi / Arduino

- AWS

- SolidWorks / Altium / Quartus

- Automated environment generation

Familiar With

- NVIDIA Cosmos

- Metropolis VSS

- COLMAP

- 3D Gaussian Splatting

- fVDB/NanoVDB

Hardware & Embedded Systems

- Embedded C, microcontrollers, FPGA

- LiDAR & camera integration

- Sensor fusion & calibration

- PCB & hardware design

- Field‑Oriented Control (FOC)

- CAN bus communication

Technical Knowledge

- Digital Twins & Simulation

- Synthetic Data Generation (SDG)

- Real-to-Sim Pipelines

- Asset Pipeline Engineering

- Robotics & Autonomous Systems

- Perception & Autonomy Stack

Languages

- English (Native/Bilingual)

- Arabic (Native/Bilingual)

Autonomous Systems Engineer — Rovex Technologies Corporation, Gainesville, FL

Jan 2025 – Present

- Simulation & Digital Twins: Engineered high-fidelity digital twins in NVIDIA Isaac Sim, Omniverse, and OpenUSD workflows to validate autonomy stacks and perception pipelines before deployment, reducing field testing cycles by 13%.

- Navigation & Mapping: Built navigation and mapping pipelines that transform multi-sensor visual and depth data into simulation-ready 3D world models for hospital corridors and stretcher transport routes.

- Autonomy Stack Architecture: Architected and optimized a ROS 2 autonomy stack with Nav2, mission control, and behavior-tree orchestration, improving collision-free operation by 20.2% in mixed human-robot deployments.

- Clinical Workflow Impact: Supported workflows for AMRs that attach to standard hospital stretchers, reducing the non-clinical transport burden so staff can return to bedside care faster.

- CI/CD for Robotics: Contributed to headless simulation CI with KPI tracking, catching 20%+ regressions before hardware-in-the-loop testing.

Selected technologies: ROS 2, Nav2, NVIDIA Isaac Sim, Omniverse, OpenUSD, OpenCV, PCL, Docker

Robotics Engineer Researcher — University of Florida

May 2024 – Aug 2024

- ROS 2 Development: Built custom ROS 2 packages for robot control and navigation using Git-based workflows.

- SLAM & State Estimation: Tuned SLAM algorithms with EKF-based state estimation in Gazebo and NVIDIA Isaac Sim to improve localization consistency.

- Simulation Research: Developed and evaluated digital twin scenarios for mapping and perception stack validation.

- Research Collaboration: Communicated findings via presentations, technical reports, and architecture recommendations.

Selected technologies: ROS 2, Gazebo + NVIDIA Isaac Sim/Omniverse (OpenUSD), EKF, Git/GitHub

Machine Learning Engineer Intern — Flapmax, Austin, TX (Remote)

May 2023 – Aug 2023

- Model Development: Engineered an MRI tumor detection model with ~95% accuracy and optimized inference using OpenVINO for 4x faster runtime.

- Privacy & Performance: Implemented Federated Learning strategies for privacy-preserving, decentralized model training on sensitive medical data.

Selected technologies: Python, TensorFlow, OpenVINO, Federated Learning

Software Engineer Intern — Snark Health, Nairobi, Kenya (Remote)

May 2022 – Aug 2022

- Data Analysis: Applied K-means clustering to analyze large-scale healthcare datasets from Kenya and identify doctor-patient engagement patterns.

- Model Validation: Validated ML models and presented results at the FAI Summit 2022.

Selected technologies: Python, scikit-learn

Account Specialist — UF Help Desk, Gainesville, FL

Nov 2020 – Jun 2021

- Resolved 100+ technical support tickets per week and managed directory data for 200+ staff.

Math Tutor — Santa Fe College, Gainesville, FL

Feb 2019 – May 2020

- Provided academic support to 200+ students in algebra through calculus.

Autonomous Hospital Transport Robot Jan 2024 – Dec 2024

- UF Senior Design (CEN4908C): ROS 2-based autonomous mobile robot for hospital logistics that integrates with standard hospital transport workflows.

- Built the hardware-software stack around Jetson Orin Nano, LiDAR, RGB-D cameras, IMU, Nav2, SLAM, RViz, and mission control nodes.

- Validated navigation and localization behaviors in Gazebo and NVIDIA Isaac Sim / Omniverse (OpenUSD) before hardware testing in hospital-like corridors.

Mood Music Sep 2023 – Nov 2023

- Web app that recommends a Spotify playlist based on detected or selected mood.

- Repository: github.com/dannirash/Mood-Music

- Tools: OpenCV, Flask, Python, Spotify API, HTML/CSS, JavaScript.

University of Florida, Gainesville, FL

Dec 2024

- Computer Engineering B.S. (Herbert Wertheim College of Engineering)

- Relevant coursework: CDA 3101 (Computer Organization), EEL 3701C (Digital Logic), EEL 4744C (Microprocessor Applications), COP 3530 (Data Structures & Algorithms), MAS 3114 (Computational Linear Algebra), CEN 3031 (Software Engineering), CEN 4908C (Senior Design).

- Developed the hardware and software foundations needed for real-time autonomy, EKF-based state estimation, and ROS 2 system design.

Santa Fe College, Gainesville, FL

May 2020

- Computer Engineering A.A.

- Transferred to UF Computer Engineering after completing core mathematics, physics, and engineering fundamentals.

Council Member — International Center Council, University of Florida Jan 2023 – Dec 2024

- Co‑founded the council with Dean Marta Wayne to advocate for 1,000+ international students.

- Provided strategic input for programs supporting international students at UF.

Marketing Director — Village Mentors UF Chapter, University of Florida Aug 2020 – Jun 2021

- Delivered mentoring and tutoring to under-resourced students and coordinated outreach.

Associate Mechanical Designer (Dassault Systèmes) Aug 2020

- Certified proficiency in 3D CAD design using Dassault Systèmes tools.

NVIDIA GTC25 Physical AI Workshop Nov 2025

- Hands-on workshop on building and deploying AI-powered autonomous systems using NVIDIA Metropolis, Isaac Sim, and Omniverse/OpenUSD workflows.

Introduction to Quantum Computing LinkedIn Learning

- Completed foundational training in qubits, superposition, gates, and circuit-based quantum reasoning.

CRLA Certified Math Tutor Aug 2019

- Certified by the College Reading and Learning Association (CRLA).

Photography

Photography is where my visual systems thinking started. Composition, lighting, and motion studies directly inform how I design camera-LiDAR perception pipelines, feature selection, and robust scene understanding for autonomous robots.

See more on Instagram.

Game Hub

Side projects built for fun, experimentation, and interaction design. These game prototypes sharpen my real-time systems intuition outside of robotics work.

Gator Buster Jan 2021 – Apr 2021

Description: Arcade game where players bust maskless gators for points.

Repository: Gator Buster

Controls: Use SPACE or TOUCH.

Ping Pong Apr 2025

Description: Classic ping pong game built with p5.js, featuring power-ups, an AI opponent, and a timed mode.

Repository: Ping Pong Game

Controls: Q/A (Left), P/L (Right), T (Timed mode), ESC (Pause)