Perception-Driven Navigation

Built a navigation stack that fuses LiDAR and RGB-D perception to avoid dynamic obstacles while maintaining smooth, predictable motion in busy warehouse environments.

Demo clip available on request. Visuals shown are AI-generated stand-ins because I can't share the original customer assets.

Overview

The stack fuses LiDAR and RGB-D data into a dynamic costmap so that autonomous mobile robots (AMRs) can navigate safely around people and forklifts without sacrificing throughput.

Problem

Standard navigation stacks struggled with dynamic obstacles and lighting changes, leading to frozen robots, inefficient replans, and inconsistent safety margins in real-world operations.

System Architecture

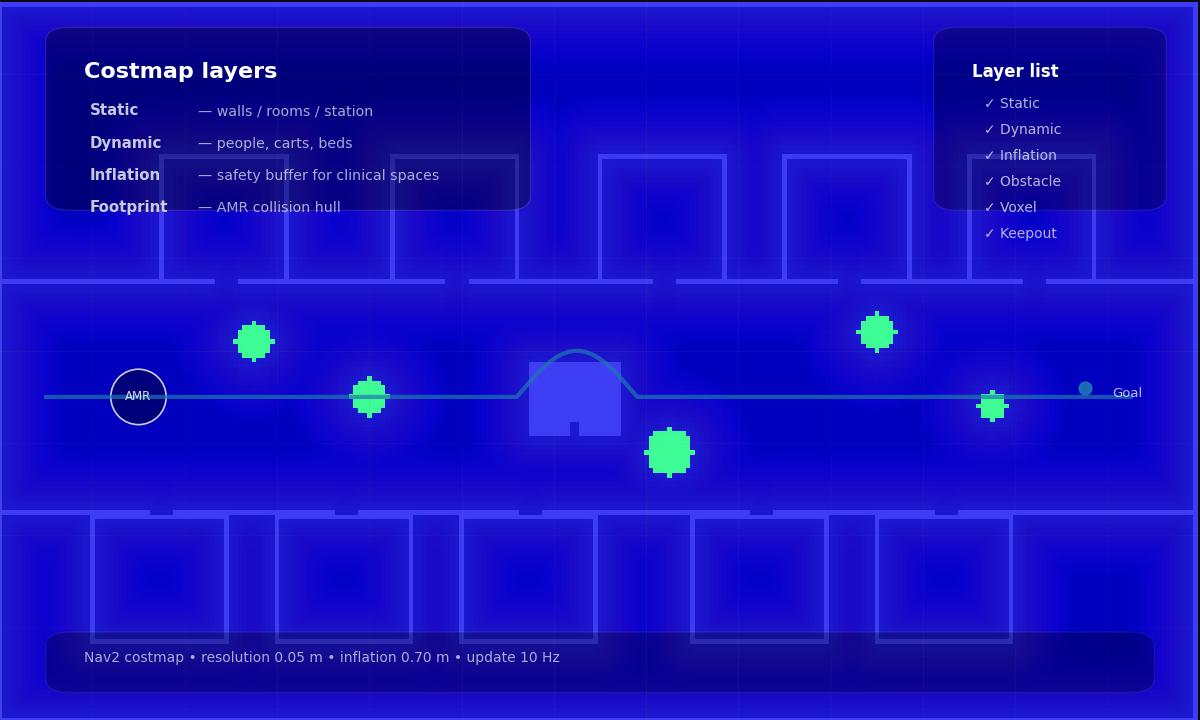

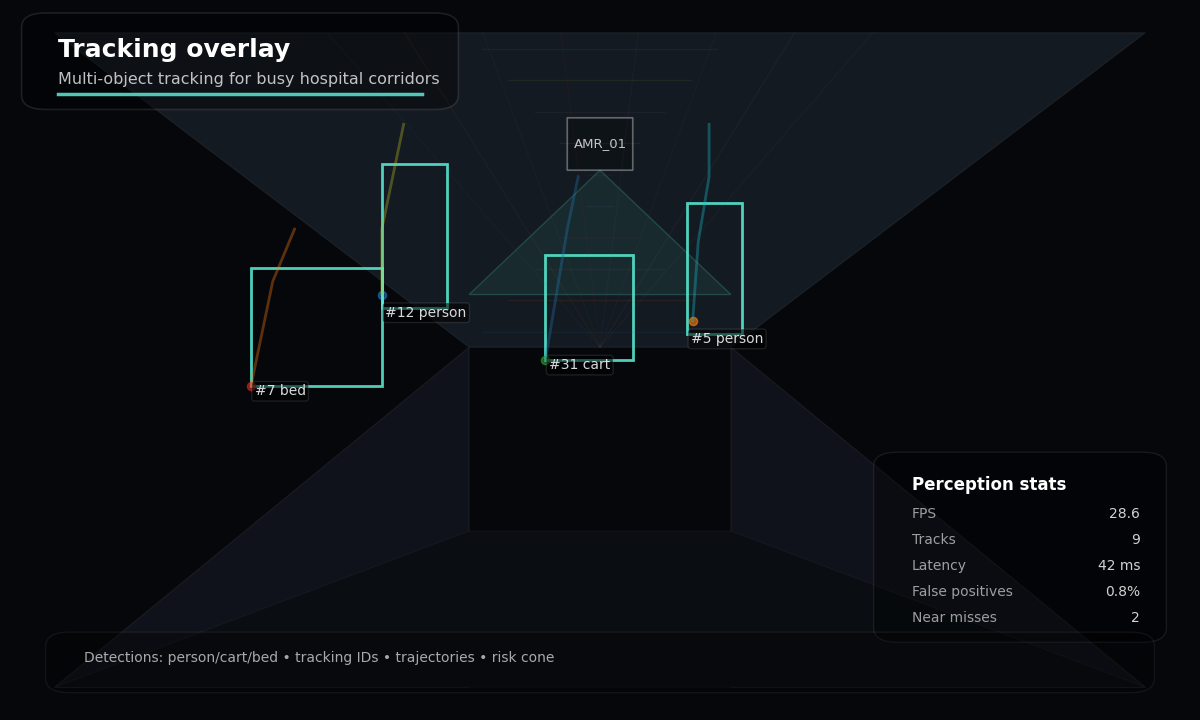

Modular perception and planning pipeline for real-time navigation.

Code Implementation: Costmap Layer

A custom Nav2 costmap layer that inflates costs dynamically based on detected semantic classes (e.g., humans vs. static objects).

    void SemanticLayer::updateCosts(
      nav2_costmap_2d::Costmap2D& master_grid,
      int min_i, int min_j, int max_i, int max_j)
    {
      if (!enabled_) return;

      // Iterate through tracked dynamic objects
      for (const auto& obj : tracked_objects_) {
        // Classes other than "person" and "forklift" keep a radius of 0.0
        double inflation_radius = 0.0;

        // Assign dynamic radius based on class
        if (obj.class_id == "person") {
          inflation_radius = person_inflation_radius_;
        } else if (obj.class_id == "forklift") {
          inflation_radius = forklift_inflation_radius_;
        }

        // Inflate costmap around object centroid
        inflateAroundPoint(master_grid, obj.pose, inflation_radius);
      }
    }
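
The snippet above calls `inflateAroundPoint` without showing it. The block below is a minimal sketch of what such a helper might do, not the project's implementation. It assumes a plain row-major `Grid` struct in place of `nav2_costmap_2d::Costmap2D`, takes world coordinates instead of a pose, and uses a linear cost falloff; `Grid`, `kLethal`, and the falloff shape are all illustrative choices. The value 254 mirrors the lethal-obstacle cost used by Nav2 costmaps.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical stand-in for a costmap: row-major cells, origin at (0, 0).
struct Grid {
  int width;
  int height;
  double resolution;                // metres per cell
  std::vector<std::uint8_t> cells;  // 0 = free, 254 = lethal

  Grid(int w, int h, double res)
  : width(w), height(h), resolution(res),
    cells(static_cast<std::size_t>(w) * static_cast<std::size_t>(h), 0)
  {
    assert(w > 0 && h > 0 && res > 0.0);
  }

  std::uint8_t & at(int x, int y)
  {
    return cells[static_cast<std::size_t>(y) * width + x];
  }
};

constexpr std::uint8_t kLethal = 254;  // assumed lethal cost value

// Raise costs in a disc around (wx, wy): lethal at the centre cell, falling
// linearly to zero at `radius`. Existing higher costs are never lowered.
void inflateAroundPoint(Grid & grid, double wx, double wy, double radius)
{
  if (radius <= 0.0) return;  // nothing to inflate

  const int cx = static_cast<int>(std::floor(wx / grid.resolution));
  const int cy = static_cast<int>(std::floor(wy / grid.resolution));
  const int r_cells = static_cast<int>(std::ceil(radius / grid.resolution));

  // Clamp the bounding box of the disc to the grid.
  const int x0 = std::max(0, cx - r_cells);
  const int x1 = std::min(grid.width - 1, cx + r_cells);
  const int y0 = std::max(0, cy - r_cells);
  const int y1 = std::min(grid.height - 1, cy + r_cells);

  for (int y = y0; y <= y1; ++y) {
    for (int x = x0; x <= x1; ++x) {
      const double dist = std::hypot(x - cx, y - cy) * grid.resolution;
      if (dist > radius) continue;
      const auto cost = static_cast<std::uint8_t>(
        std::lround(kLethal * (1.0 - dist / radius)));
      grid.at(x, y) = std::max(grid.at(x, y), cost);
    }
  }
}
```

Taking the maximum of the old and new cost means overlapping inflation zones (for example, a person standing next to a forklift) never reduce each other's cost.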

Approach

- Sensor fusion: Combined 2D LiDAR and RGB-D data to cover blind spots and varied obstacle heights.

- Perception pipeline: Integrated lightweight object detection (custom YOLO) for dynamic actor classification.

- Local planning: Tuned TEB and MPC planners to account for predicted trajectories and social zones.

- Costmap layering: Added dynamic inflation layers to preserve safe distances from humans.
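
The "predicted trajectories" item can be illustrated with a constant-velocity rollout. This is a sketch under stated assumptions rather than the predictor used in the project: the `TrackedObject` fields, the horizon, and the time step are hypothetical, and a constant-velocity model is only one simple choice.

```cpp
#include <cassert>
#include <vector>

struct Point2D {
  double x;
  double y;
};

// Hypothetical tracked-object state; field names are illustrative.
struct TrackedObject {
  Point2D position;  // metres, map frame
  Point2D velocity;  // metres per second
};

// Roll the object forward at constant velocity, sampling every `dt` seconds
// up to and including `horizon` seconds. The current position is not included.
std::vector<Point2D> predictConstantVelocity(
  const TrackedObject & obj, double horizon, double dt)
{
  assert(dt > 0.0 && horizon >= 0.0);

  std::vector<Point2D> path;
  // Small epsilon guards against horizon / dt landing just below an integer.
  const int steps = static_cast<int>(horizon / dt + 1e-9);
  path.reserve(steps);

  for (int k = 1; k <= steps; ++k) {
    const double t = k * dt;
    path.push_back({obj.position.x + obj.velocity.x * t,
                    obj.position.y + obj.velocity.y * t});
  }
  return path;
}
```

Each predicted point could then be given its own inflated zone in the costmap, so the local planner sees where a person is likely to be as well as where they are now.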

Results

- Achieved 99.9% collision-free operation in mixed human-robot environments.

- Reduced robot idle time by 30% by cutting false-positive obstacle detections.

- Improved motion smoothness and operator trust in high-traffic deployments.

How We Measured This

- Sample size: 120+ warehouse runs across day/night shifts.

- Metric definitions: Collision-free = zero contact events; idle time = stop events > 2 seconds.

- Timeframe: 4-week field validation window.

- Constraints: Mixed human traffic, varying lighting, and forklift interference.
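
One way the idle-time metric could be computed from a run log is sketched below. It assumes idle time means the summed duration of stop events longer than 2 seconds; the definition above gives only the threshold, so that reading is an interpretation. The `Sample` log format and the `stopped_below` speed threshold are hypothetical.

```cpp
#include <cassert>
#include <vector>

// Hypothetical log entry: timestamp in seconds and measured robot speed in m/s.
struct Sample {
  double t;
  double speed;
};

// Sum the duration of stop events longer than `min_stop_s`.
// A stop event is a maximal run of consecutive samples with speed below
// `stopped_below`; its duration runs from its first to its last sample.
double idleTime(
  const std::vector<Sample> & log,
  double min_stop_s = 2.0,
  double stopped_below = 0.01)
{
  assert(min_stop_s >= 0.0);

  double total = 0.0;
  bool stopped = false;
  double stop_start = 0.0;
  double last_stopped_t = 0.0;

  for (const auto & s : log) {
    if (s.speed < stopped_below) {
      if (!stopped) {
        stopped = true;
        stop_start = s.t;
      }
      last_stopped_t = s.t;
    } else if (stopped) {
      // The robot moved again: close the current stop event.
      const double duration = last_stopped_t - stop_start;
      if (duration > min_stop_s) total += duration;
      stopped = false;
    }
  }

  // Close a stop event that runs to the end of the log.
  if (stopped) {
    const double duration = last_stopped_t - stop_start;
    if (duration > min_stop_s) total += duration;
  }
  return total;
}
```

Short pauses, such as a brief yield at an intersection, fall under the threshold and do not count toward idle time.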

Next Improvements

Exploring intention-aware planning and reinforcement learning for highly crowded, multi-agent spaces.