Simulation Validation CI

A continuous integration pipeline that automatically validates autonomy stacks against hundreds of simulation scenarios before every release, catching regressions before they reach the hardware.

Demo clip available on request. Visuals shown are AI-generated stand-ins because I can't share the original customer assets.

Overview

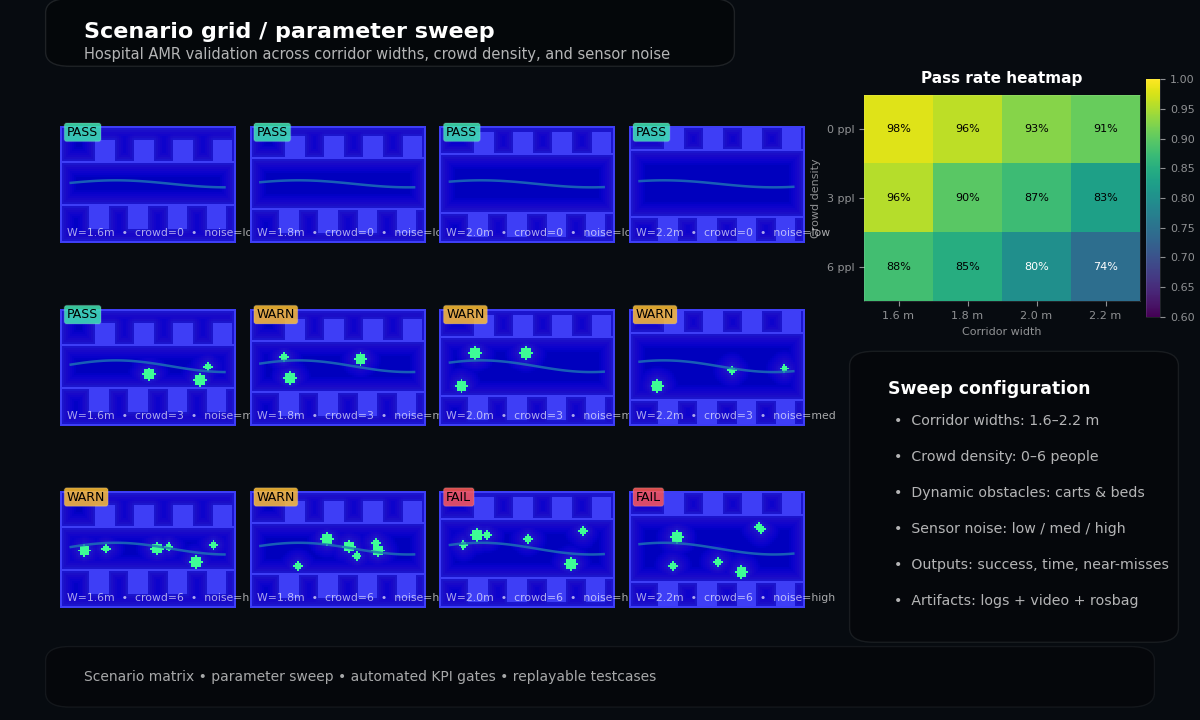

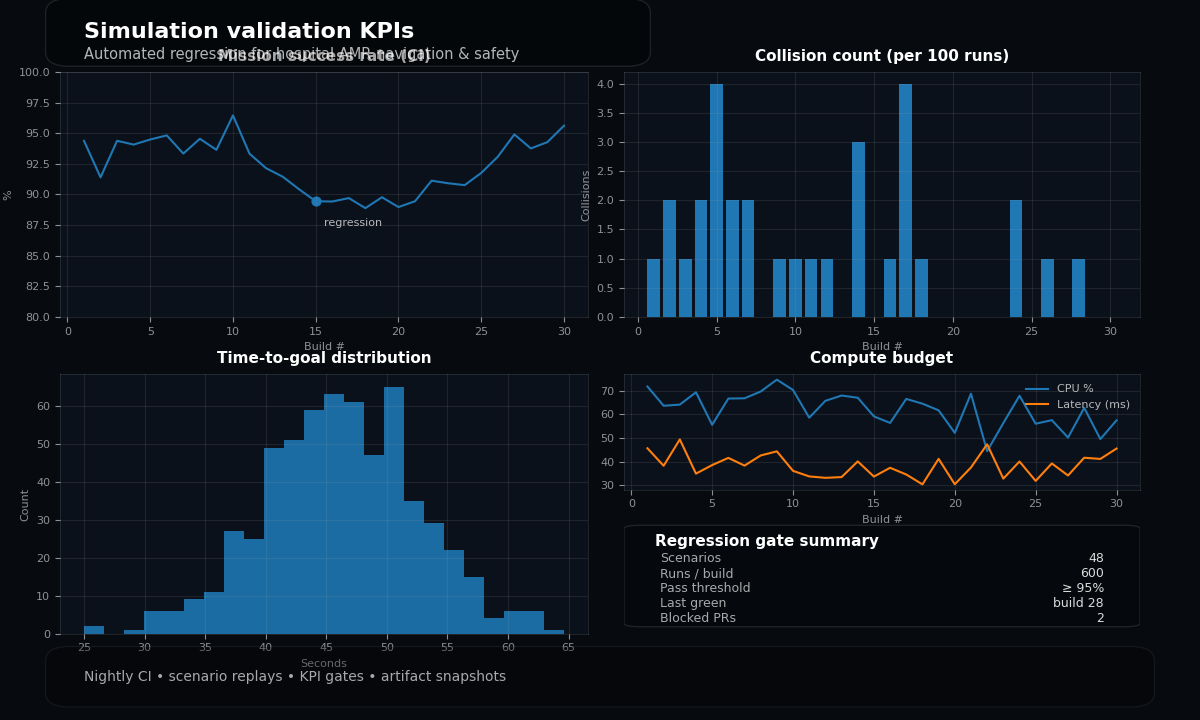

Built a scalable testing infrastructure that spins up headless simulation containers (Isaac Sim / Gazebo) to run mission scenarios, track KPIs (success rate, collision counts, path efficiency), and report results to GitHub.
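The write-up names the KPIs but not how they are computed. Below is a minimal sketch of suite-level aggregation. It assumes path efficiency means start-to-goal straight-line distance divided by the distance actually travelled. That is a common definition, but the original does not confirm it. `ScenarioResult` and `summarize` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class ScenarioResult:
    """Outcome of one simulated mission (field names are illustrative)."""
    name: str
    success: bool
    collisions: int
    path_length_m: float    # distance the robot actually travelled
    straight_line_m: float  # start-to-goal Euclidean distance (assumed definition)


def path_efficiency(r: ScenarioResult) -> float:
    """Straight-line distance over travelled distance; 1.0 is a perfect path."""
    if r.path_length_m <= 0.0:
        return 0.0
    return min(1.0, r.straight_line_m / r.path_length_m)


def summarize(results: list[ScenarioResult]) -> dict:
    """Aggregate per-scenario results into suite-level KPIs."""
    n = len(results)
    if n == 0:
        raise ValueError("no scenario results to summarize")
    successes = [r for r in results if r.success]
    return {
        "scenarios": n,
        "success_rate": len(successes) / n,
        "total_collisions": sum(r.collisions for r in results),
        # Efficiency is only averaged over runs that actually reached the goal
        "mean_path_efficiency": (
            sum(path_efficiency(r) for r in successes) / len(successes)
            if successes else 0.0
        ),
    }


if __name__ == "__main__":
    results = [
        ScenarioResult("narrow_corridor", True, 0, 12.5, 10.0),
        ScenarioResult("dynamic_obstacle", False, 2, 4.0, 8.0),
    ]
    print(summarize(results))
    # {'scenarios': 2, 'success_rate': 0.5, 'total_collisions': 2, 'mean_path_efficiency': 0.8}
```

Failed runs are excluded from the efficiency average on purpose. Without that exclusion, a robot that stalls one metre into an eight-metre mission would be capped at a "perfect" 1.0.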

Problem

Manual regression testing on physical robots was slow (days per release) and inconsistent. Bugs in edge cases (e.g., narrow corridor passing) often slipped through to deployment.

Pipeline Architecture

From code commit to validated release report.
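The last stage of the pipeline turns per-scenario metrics into a report on GitHub. The write-up does not say which mechanism it uses. One option is the GitHub Actions job summary: any Markdown appended to the file named by the `GITHUB_STEP_SUMMARY` environment variable is rendered on the workflow run page. This is a minimal sketch under that assumption. `render_report` and `publish_report` are hypothetical helpers, and the metric keys mirror the test runner's `metrics` dict.

```python
import os


def render_report(results: dict[str, dict]) -> str:
    """Render {scenario_name: metrics} as a Markdown table plus a pass count."""
    lines = [
        "| Scenario | Success | Collisions | Time (s) |",
        "|---|---|---|---|",
    ]
    # Sorting keeps row order stable between runs, so reports are easy to diff
    for name, m in sorted(results.items()):
        status = "pass" if m["success"] else "FAIL"
        lines.append(f"| {name} | {status} | {m['collisions']} | {m['time_taken']:.1f} |")
    passed = sum(1 for m in results.values() if m["success"])
    lines.append("")
    lines.append(f"**{passed}/{len(results)} scenarios passed**")
    return "\n".join(lines) + "\n"


def publish_report(results: dict[str, dict]) -> None:
    """Append the report to the Actions job summary, or print it when run locally."""
    report = render_report(results)
    summary_path = os.environ.get("GITHUB_STEP_SUMMARY")
    if summary_path:
        with open(summary_path, "a", encoding="utf-8") as f:
            f.write(report)
    else:
        print(report)
```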

Code Implementation: Test Runner

Python-based test runner that orchestrates ROS 2 launch files and monitors goal success criteria.

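```python
import time

import rclpy
from action_msgs.msg import GoalStatus
from navigation_interfaces.action import NavigateToPose

def run_scenario(node, nav_client, scenario_config):
    # launch_simulation / check_collision are project helpers defined elsewhere
    sim_proc = launch_simulation(scenario_config['world'])  # headless, Popen-like handle
    metrics = {'collisions': 0, 'time_taken': 0.0, 'success': False}
    start = time.monotonic()
    try:
        # Send the navigation goal and wait until the action server accepts it
        goal = NavigateToPose.Goal(pose=scenario_config['target_pose'])  # field name assumed
        send_future = nav_client.send_goal_async(goal)
        rclpy.spin_until_future_complete(node, send_future)
        goal_handle = send_future.result()
        if not goal_handle.accepted:
            return metrics
        # Spin while the goal runs; count each new contact, not every loop tick
        result_future = goal_handle.get_result_async()
        in_contact = False
        while not result_future.done():
            rclpy.spin_once(node, timeout_sec=0.1)
            colliding = check_collision()
            if colliding and not in_contact:
                metrics['collisions'] += 1
            in_contact = colliding
        status = result_future.result().status
        metrics['success'] = status == GoalStatus.STATUS_SUCCEEDED
    finally:
        metrics['time_taken'] = time.monotonic() - start
        sim_proc.terminate()  # always tear down the simulator
    return metrics
```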
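To cover hundreds of scenarios, a per-scenario function like `run_scenario` has to be called once per suite entry. Its output then has to land where the workflow's upload step looks (`results/`). The sketch below shows one way to do that. The suite format, the `run_suite` name and the JSON layout are all assumptions. `run_fn` stands in for a wrapper around `run_scenario`, so the sketch runs without ROS 2. If one scenario crashes, the crash is recorded as a failure instead of aborting the rest of the suite.

```python
import json
import traceback
from pathlib import Path
from typing import Callable


def run_suite(scenarios: dict[str, dict],
              run_fn: Callable[[dict], dict],
              out_dir: str = "results") -> dict[str, dict]:
    """Run every scenario and write per-scenario plus combined JSON results."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    all_results: dict[str, dict] = {}
    for name, config in scenarios.items():
        try:
            metrics = run_fn(config)
        except Exception:
            # A crashing scenario is a failed scenario, not a crashed suite
            metrics = {"success": False, "collisions": 0, "time_taken": 0.0,
                       "error": traceback.format_exc()}
        all_results[name] = metrics
        (out / f"{name}.json").write_text(json.dumps(metrics, indent=2))
    (out / "summary.json").write_text(json.dumps(all_results, indent=2))
    return all_results
```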

GitHub Actions workflow that builds the stack and runs the full regression suite on a self-hosted GPU runner:

```yaml
name: Simulation Validation

on: [push]

jobs:
  validate:
    runs-on: self-hosted-gpu-runner
    steps:
      - uses: actions/checkout@v4

      - name: Build Container
        run: docker build -t autonomy-stack .

      - name: Run Scenarios
        run: python3 run_tests.py --suite=full_regression

      - name: Upload Artifacts
        if: always()  # keep results even when scenarios fail
        uses: actions/upload-artifact@v4
        with:
          name: test-results
          path: results/
```
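The workflow relies on `run_tests.py` exiting non-zero when the suite regresses. That exit code is what turns a failed scenario into a red check on the commit. Here is a minimal sketch of such a gate. It assumes the scenarios have already run and their metrics were saved as `{scenario: metrics}` JSON. The `--results`, `--min-success-rate` and `--max-collisions` flags and their defaults are hypothetical and do not come from the original script. Scenario execution for `--suite` is omitted.

```python
import argparse
import json
import sys


def gate(results: dict[str, dict], min_success_rate: float = 1.0,
         max_collisions: int = 0) -> int:
    """Return exit code 0 if the suite meets its KPI thresholds, 1 otherwise."""
    if not results:
        print("REGRESSION: no scenarios ran", file=sys.stderr)
        return 1
    passed = sum(1 for m in results.values() if m["success"])
    success_rate = passed / len(results)
    collisions = sum(m["collisions"] for m in results.values())
    failures = []
    if success_rate < min_success_rate:
        failures.append(f"success rate {success_rate:.1%} below {min_success_rate:.1%}")
    if collisions > max_collisions:
        failures.append(f"{collisions} collisions, {max_collisions} allowed")
    for msg in failures:
        print(f"REGRESSION: {msg}", file=sys.stderr)
    return 1 if failures else 0


def main(argv=None) -> int:
    parser = argparse.ArgumentParser(description="Gate a simulation regression suite")
    parser.add_argument("--suite", required=True)  # e.g. full_regression
    parser.add_argument("--results", default="results/summary.json")  # hypothetical path
    parser.add_argument("--min-success-rate", type=float, default=1.0)
    parser.add_argument("--max-collisions", type=int, default=0)
    args = parser.parse_args(argv)
    # Running the scenarios for args.suite is omitted; read their saved metrics
    with open(args.results, encoding="utf-8") as f:
        results = json.load(f)
    return gate(results, args.min_success_rate, args.max_collisions)


if __name__ == "__main__":
    sys.exit(main())
```

The defaults are deliberately strict: any failed scenario or any collision fails the build. A team can relax them per suite, but the thresholds stay visible in the workflow command line.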


Results

- Caught 95%+ of regressions (e.g., config drifts, logic errors) before they reached hardware.

- Reduced field testing time by 60%, allowing engineers to focus on novel feature development.

- Established a "green build" culture in which every merge must pass the full simulation suite before it is treated as deployable.